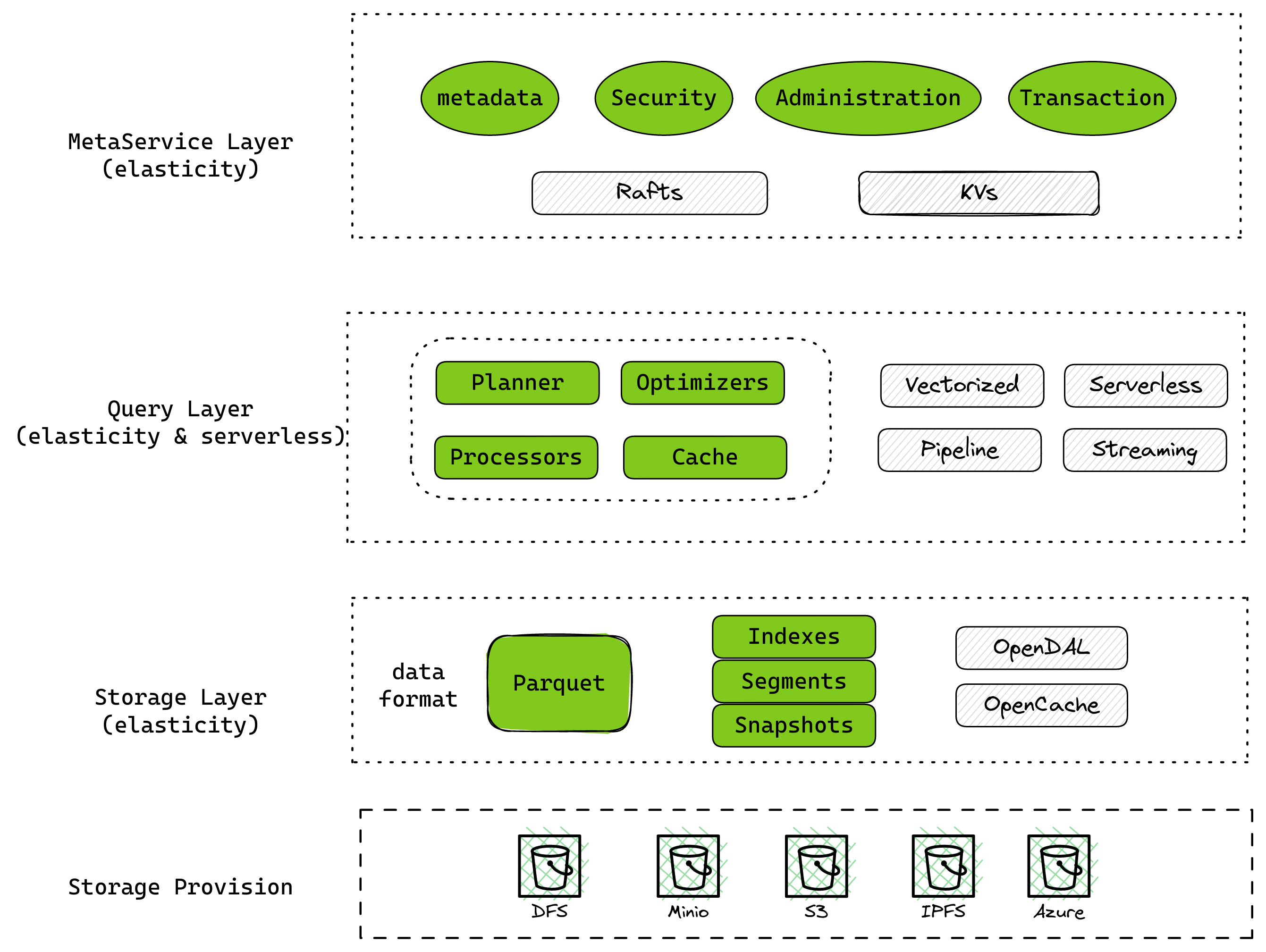

Databend is a modern cloud data warehouse that serves your massive-scale analytics needs at low cost and with low complexity. It is an open-source alternative to Snowflake and is also available in the cloud: https://app.databend.com.

What's New

Check out what we've done this week to make Databend even better for you.

Features & Improvements ✨

Meta

- add databend-meta config grpc_api_advertise_host (#9835)

AST

Expression

- add Decimal128 and Decimal256 types (#9856)
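
Decimal types like these are commonly stored as a scaled integer: the value is an integer mantissa divided by 10^scale. As a rough sketch of that idea, the code below models a Decimal128-style value with Rust's native i128. The Decimal128 struct, its fields, the 38-digit precision cap, and its methods are hypothetical and are not Databend's actual implementation. A Decimal256 type would follow the same scheme with a 256-bit integer, which Rust's standard library does not provide.

```rust
use std::fmt;

/// Hypothetical Decimal128-style value: the number is `mantissa / 10^scale`.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
struct Decimal128 {
    mantissa: i128,
    precision: u8, // total significant digits allowed (at most 38 in this sketch)
    scale: u8,     // digits after the decimal point
}

impl Decimal128 {
    /// Builds a decimal, rejecting invalid precision/scale or a mantissa
    /// with more digits than `precision` allows.
    fn new(mantissa: i128, precision: u8, scale: u8) -> Option<Self> {
        if precision == 0 || precision > 38 || scale > precision {
            return None;
        }
        let limit = 10u128.pow(precision as u32);
        if mantissa.unsigned_abs() >= limit {
            return None;
        }
        Some(Self { mantissa, precision, scale })
    }

    /// Exact addition of two decimals with the same scale; returns None
    /// on a scale mismatch or when the result no longer fits `precision`.
    fn checked_add(self, other: Self) -> Option<Self> {
        if self.scale != other.scale {
            return None;
        }
        let sum = self.mantissa.checked_add(other.mantissa)?;
        Decimal128::new(sum, self.precision.max(other.precision), self.scale)
    }
}

impl fmt::Display for Decimal128 {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        let sign = if self.mantissa < 0 { "-" } else { "" };
        let abs = self.mantissa.unsigned_abs();
        if self.scale == 0 {
            return write!(f, "{}{}", sign, abs);
        }
        let divisor = 10u128.pow(self.scale as u32);
        // Zero-pad the fractional part to exactly `scale` digits.
        write!(f, "{}{}.{:0width$}", sign, abs / divisor, abs % divisor, width = self.scale as usize)
    }
}
```

With scale 2, adding the mantissas 10 and 20 gives exactly 0.30, avoiding the rounding error that binary floating point would introduce for 0.1 + 0.2.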

Functions

- support array_indexof (#9840)

- support array functions array_unique and array_distinct (#9875)

- support array aggregate functions (#9903)
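
To give a feel for what these functions do, the sketch below models them over Rust slices. The semantics are assumptions rather than Databend's documented behavior: array_indexof is modeled on ClickHouse's indexOf (1-based position of the first match, 0 when absent), array_unique is assumed to count distinct elements, and array_distinct is assumed to drop duplicates while keeping first-occurrence order. NULL handling is left out.

```rust
use std::collections::HashSet;
use std::hash::Hash;

/// 1-based index of the first element equal to `target`, or 0 if absent
/// (assumed semantics, modeled on ClickHouse's indexOf).
fn array_indexof<T: PartialEq>(array: &[T], target: &T) -> usize {
    array
        .iter()
        .position(|x| x == target)
        .map_or(0, |i| i + 1)
}

/// Number of distinct elements in the array (assumed semantics).
fn array_unique<T: Eq + Hash>(array: &[T]) -> usize {
    array.iter().collect::<HashSet<_>>().len()
}

/// Array with duplicates removed, keeping the first occurrence of each element.
fn array_distinct<T: Eq + Hash + Clone>(array: &[T]) -> Vec<T> {
    let mut seen = HashSet::new();
    array
        .iter()
        // `insert` returns false for elements already seen, filtering them out.
        .filter(|x| seen.insert(*x))
        .cloned()
        .collect()
}
```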

Query

- add column id to TableSchema; use column ids instead of indexes when reading and writing data (#9623)

- support views in system.columns (#9853)
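
Why prefer ids over positions? Positional indexes shift when a column is dropped, so data written under an older schema could be read back into the wrong column, while an id assigned once per column stays stable. The sketch below illustrates the idea with a hypothetical TableSchema that hands out monotonically increasing ids; the type, field, and method names are illustrative and not Databend's actual definitions.

```rust
/// A column with an id that never changes after creation.
#[derive(Debug, Clone, PartialEq)]
struct Field {
    id: u32,
    name: String,
}

/// Hypothetical schema that assigns ids from a monotonically increasing counter.
#[derive(Debug, Default)]
struct TableSchema {
    fields: Vec<Field>,
    next_column_id: u32,
}

impl TableSchema {
    /// Appends a column and returns its newly assigned id.
    fn add_column(&mut self, name: &str) -> u32 {
        let id = self.next_column_id;
        self.next_column_id += 1;
        self.fields.push(Field { id, name: name.to_string() });
        id
    }

    /// Removes a column; the remaining columns keep their ids,
    /// even though their positional indexes shift.
    fn drop_column(&mut self, name: &str) {
        self.fields.retain(|f| f.name != name);
    }

    /// Current positional index of a column, which can change over time.
    fn index_of(&self, name: &str) -> Option<usize> {
        self.fields.iter().position(|f| f.name == name)
    }

    /// Looks up a column by its stable id.
    fn field_by_id(&self, id: u32) -> Option<&Field> {
        self.fields.iter().find(|f| f.id == id)
    }
}
```

Because the counter is never rewound, a dropped column's id is never reused, so it cannot be mistaken for a column added later.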

Storage

- ParquetTable supports top-k optimization (#9824)
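
Top-k optimization generally targets queries of the form ORDER BY ... LIMIT k: instead of sorting every row, the engine keeps only the k best candidates seen so far. The sketch below shows that general technique with a bounded min-heap from Rust's standard library. It is a generic illustration, not the code from #9824, and the function name top_k_desc is hypothetical.

```rust
use std::cmp::Reverse;
use std::collections::BinaryHeap;

/// Returns the `k` largest values in descending order, keeping at most
/// `k` elements in memory instead of sorting the whole input.
fn top_k_desc<T: Ord>(values: impl IntoIterator<Item = T>, k: usize) -> Vec<T> {
    if k == 0 {
        return Vec::new();
    }
    // Min-heap (via Reverse) holding the current k largest values;
    // its root is the smallest of them, i.e. the eviction candidate.
    let mut heap: BinaryHeap<Reverse<T>> = BinaryHeap::with_capacity(k + 1);
    for v in values {
        if heap.len() < k {
            heap.push(Reverse(v));
        } else if let Some(Reverse(min)) = heap.peek() {
            if v > *min {
                heap.pop();
                heap.push(Reverse(v));
            }
        }
    }
    // into_sorted_vec sorts ascending by Reverse<T>, i.e. descending by T.
    heap.into_sorted_vec().into_iter().map(|Reverse(v)| v).collect()
}
```

Each row costs O(log k) work, and memory stays bounded by k regardless of input size.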

Sqllogictest

- leverage sqllogictest to benchmark TPC-H (#9887)

Code Refactoring 🎉

Meta

- remove obsolete meta service APIs read_msg() and write_msg() (#9891)

- simplify UserAPI and RoleAPI by introducing a method update_xx_with(id, f: FnOnce) (#9921)
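
A helper shaped like update_xx_with(id, f: FnOnce) centralizes the read-modify-write cycle, so callers only describe the change. The sketch below assumes an in-memory map and a hypothetical UserInfo type and update_user_with method; it omits the persistence, concurrency control, and error handling that the real UserAPI and RoleAPI would need.

```rust
use std::collections::HashMap;

/// Hypothetical user record.
#[derive(Debug, Clone, PartialEq)]
struct UserInfo {
    name: String,
    roles: Vec<String>,
}

/// Hypothetical in-memory user store.
#[derive(Default)]
struct UserStore {
    users: HashMap<u64, UserInfo>,
}

impl UserStore {
    /// Loads the user, lets the caller mutate it, then writes it back.
    /// Returns false if the user does not exist.
    fn update_user_with<F>(&mut self, id: u64, f: F) -> bool
    where
        F: FnOnce(&mut UserInfo),
    {
        // Read: copy the current value out of the store.
        let mut user = match self.users.get(&id) {
            Some(user) => user.clone(),
            None => return false,
        };
        // Modify: apply the caller-supplied change.
        f(&mut user);
        // Write: store the updated value back.
        self.users.insert(id, user);
        true
    }
}
```

Taking FnOnce rather than Fn means the closure may be called at most once, which lets callers move owned values into it.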

Cluster

- split exchange source into reader and deserializer (#9805)

- split and eliminate the status for exchange transform and sink (#9910)

Functions

- rename some array functions to add the array_ prefix (#9886)

Query

- TableArgs preserves info about positional and named args (#9917)
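
A table function call can mix positional arguments with named ones. The sketch below keeps the two kinds separate so that neither the order of the positional arguments nor the names of the named ones are lost. The TableArgs fields, the Scalar enum, and the from_args, positional, and get_named methods are illustrative assumptions, not Databend's definitions.

```rust
use std::collections::BTreeMap;

/// Hypothetical scalar argument value.
#[derive(Debug, Clone, PartialEq)]
enum Scalar {
    Int(i64),
    Str(String),
}

/// Keeps positional arguments in call order and named arguments by name.
#[derive(Debug, Default)]
struct TableArgs {
    positioned: Vec<Scalar>,
    named: BTreeMap<String, Scalar>,
}

impl TableArgs {
    /// Splits a raw argument list into positional and named parts.
    /// A `None` name marks a positional argument; duplicate names are rejected.
    fn from_args(args: Vec<(Option<String>, Scalar)>) -> Result<Self, String> {
        let mut table_args = TableArgs::default();
        for (name, value) in args {
            match name {
                None => table_args.positioned.push(value),
                Some(key) => {
                    if table_args.named.insert(key.clone(), value).is_some() {
                        return Err(format!("duplicate named argument: {key}"));
                    }
                }
            }
        }
        Ok(table_args)
    }

    /// The i-th positional argument (0-based), if present.
    fn positional(&self, i: usize) -> Option<&Scalar> {
        self.positioned.get(i)
    }

    /// A named argument by key, if present.
    fn get_named(&self, key: &str) -> Option<&Scalar> {
        self.named.get(key)
    }
}
```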

Storage

- ParquetTable lists files in read_partition (#9871)

Build/Testing/CI Infra Changes 🔌

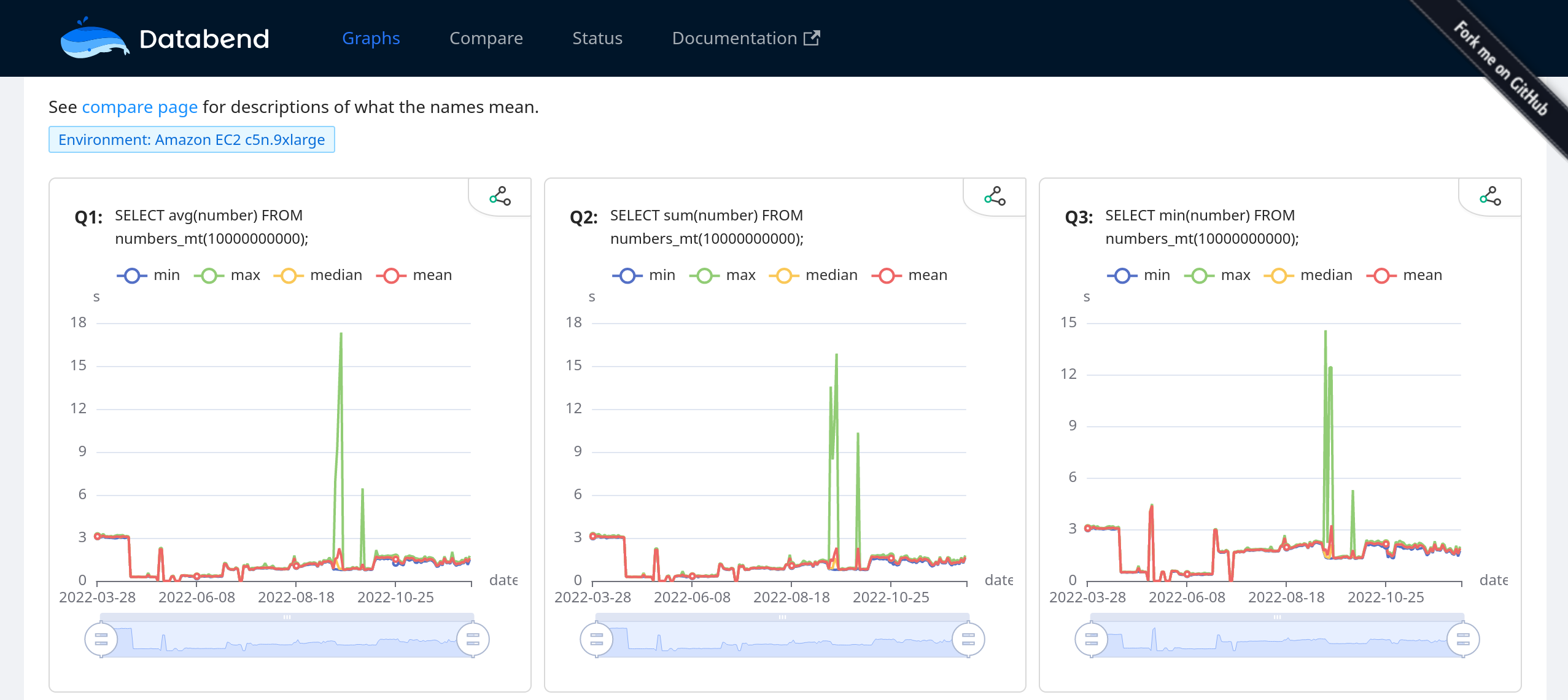

- support for running benchmark on PRs (#9788)

Bug Fixes 🔧

Functions

- fix domain calculation for nullable AND and OR (#9928)
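
Domain calculation derives, without evaluating the data, which values an expression can possibly produce; such information is typically used for optimizations like constant folding or pruning. For nullable booleans, SQL's three-valued logic makes AND and OR subtle: NULL AND FALSE is FALSE, while NULL AND TRUE is NULL. The sketch below computes result domains for a hypothetical BoolDomain type. It illustrates the logic only and is not the code changed in #9928.

```rust
/// Hypothetical domain of a nullable boolean expression:
/// which of TRUE, FALSE, and NULL it may evaluate to.
/// Both input domains are assumed to be non-empty.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
struct BoolDomain {
    has_true: bool,
    has_false: bool,
    has_null: bool,
}

/// Domain of `a AND b` under SQL three-valued logic.
fn and_domain(a: BoolDomain, b: BoolDomain) -> BoolDomain {
    BoolDomain {
        // TRUE only when both sides can be TRUE.
        has_true: a.has_true && b.has_true,
        // FALSE on either side wins, even against NULL.
        has_false: a.has_false || b.has_false,
        // NULL when one side is NULL and the other is TRUE or NULL.
        has_null: (a.has_null && (b.has_true || b.has_null))
            || (b.has_null && (a.has_true || a.has_null)),
    }
}

/// Domain of `a OR b` under SQL three-valued logic.
fn or_domain(a: BoolDomain, b: BoolDomain) -> BoolDomain {
    BoolDomain {
        // TRUE on either side wins, even against NULL.
        has_true: a.has_true || b.has_true,
        // FALSE only when both sides can be FALSE.
        has_false: a.has_false && b.has_false,
        // NULL when one side is NULL and the other is FALSE or NULL.
        has_null: (a.has_null && (b.has_false || b.has_null))
            || (b.has_null && (a.has_false || a.has_null)),
    }
}
```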

Planner

- fix slow planner caused by NDV error backtraces (#9876)

- fix ORDER BY containing an aggregate function (#9879)

- prevent panic when DELETE contains a subquery (#9902)

Query

- fix the data type of default values in INSERT (#9816)

What's On In Databend

Stay connected with the latest news about Databend.

Why You Should Try Sccache

Sccache is a ccache-like project started by Mozilla. It supports C/C++, Rust, and other languages, and can store caches locally or in a cloud storage backend. The community first added native support for the GitHub Actions Cache Service to sccache in version 0.3.3, then improved the functionality in v0.4.0-pre.6 so that it is now usable in production CI.

OpenDAL, open-sourced by Datafuse Labs, now acts as the storage access layer that sccache uses to interface with various storage services (s3/gcs/azblob, etc.).


What's Up Next

We're always open to cutting-edge technologies and innovative ideas. You're more than welcome to join the community and bring them to Databend.

Try using build-info

We currently use vergen and cargo-license to get information about Git commits, build options, and credits.

build-info can collect build information about your Rust crate. We may be able to use it to refactor the relevant logic in common-building.

```rust
pub struct BuildInfo {
    pub timestamp: DateTime<Utc>,
    pub profile: String,
    pub optimization_level: OptimizationLevel,
    pub crate_info: CrateInfo,
    pub compiler: CompilerInfo,
    pub version_control: Option<VersionControl>,
}
```

Issue 9874: Refactor: Try using build-info

Please let us know if you're interested in contributing to this issue, or pick up a good first issue at https://link.databend.rs/i-m-feeling-lucky to get started.

Changelog

You can check the changelog of Databend Nightly for details about our latest developments.

- v0.9.30-nightly

- v0.9.29-nightly

- v0.9.28-nightly

- v0.9.27-nightly

- v0.9.26-nightly

- v0.9.25-nightly

- v0.9.24-nightly

- v0.9.23-nightly

- v0.9.22-nightly

Contributors

Thanks a lot to the contributors for their excellent work this week.

andylokandy, ariesdevil, b41sh, BohuTANG, dependabot[bot], drmingdrmer, everpcpc, flaneur2020, johnhaxx7, leiysky, lichuang, mergify[bot], PsiACE, RinChanNOWWW, soyeric128, sundy-li, TCeason, Xuanwo, xudong963, youngsofun, zhang2014

Connect With Us

We'd love to hear from you. Feel free to run the code and see if Databend works for you. Submit an issue with your problem if you need help.

The Datafuse Labs community is open to everyone who loves data warehouses. Please join us and share your thoughts.

- Databend Official Website

- GitHub Discussions (Feature requests, bug reports, and contributions)

- Twitter (Stay in the know)

- Slack Channel (Chat with the community)